Seven months after Chinese startup DeepSeek rattled markets by introducing a powerful open-weight large language model—freely available for anyone to run or modify, and built for a fraction of the typical cost—OpenAI has followed suit. In an apparent bow to the competitive pressure, the US-based company released two open-weight models on August 5, marking another pivotal moment in the economics of artificial intelligence (AI).

Just a year ago, integrating generative AI into software products was expensive, unreliable, and largely experimental. Today, it’s becoming both practical and profitable. As AI model costs continue to fall, software companies are weaving the technology into more of their products to improve customer productivity and create differentiating features that can command premium prices.

Three forces are driving down AI costs: a venture capital–fueled race for scale, the rise of open-weight models, and rapid advances in the underlying hardware.

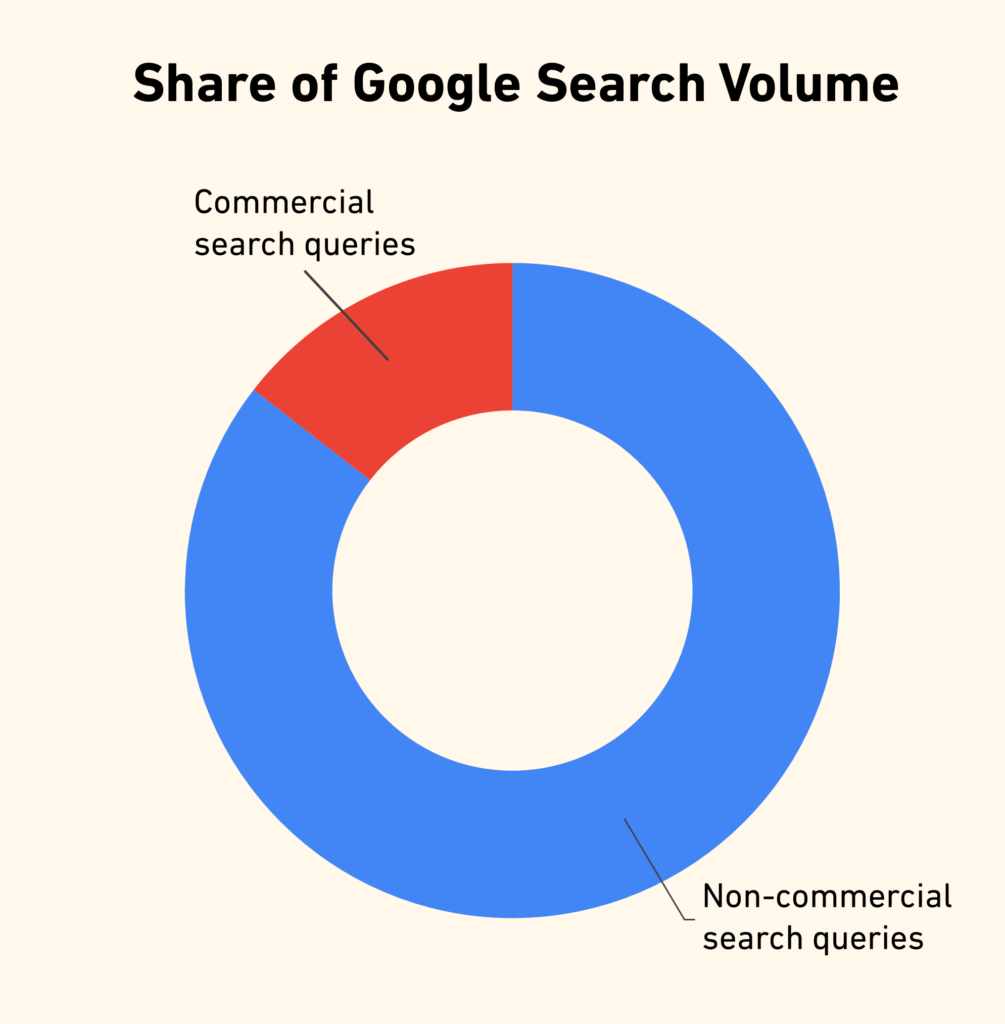

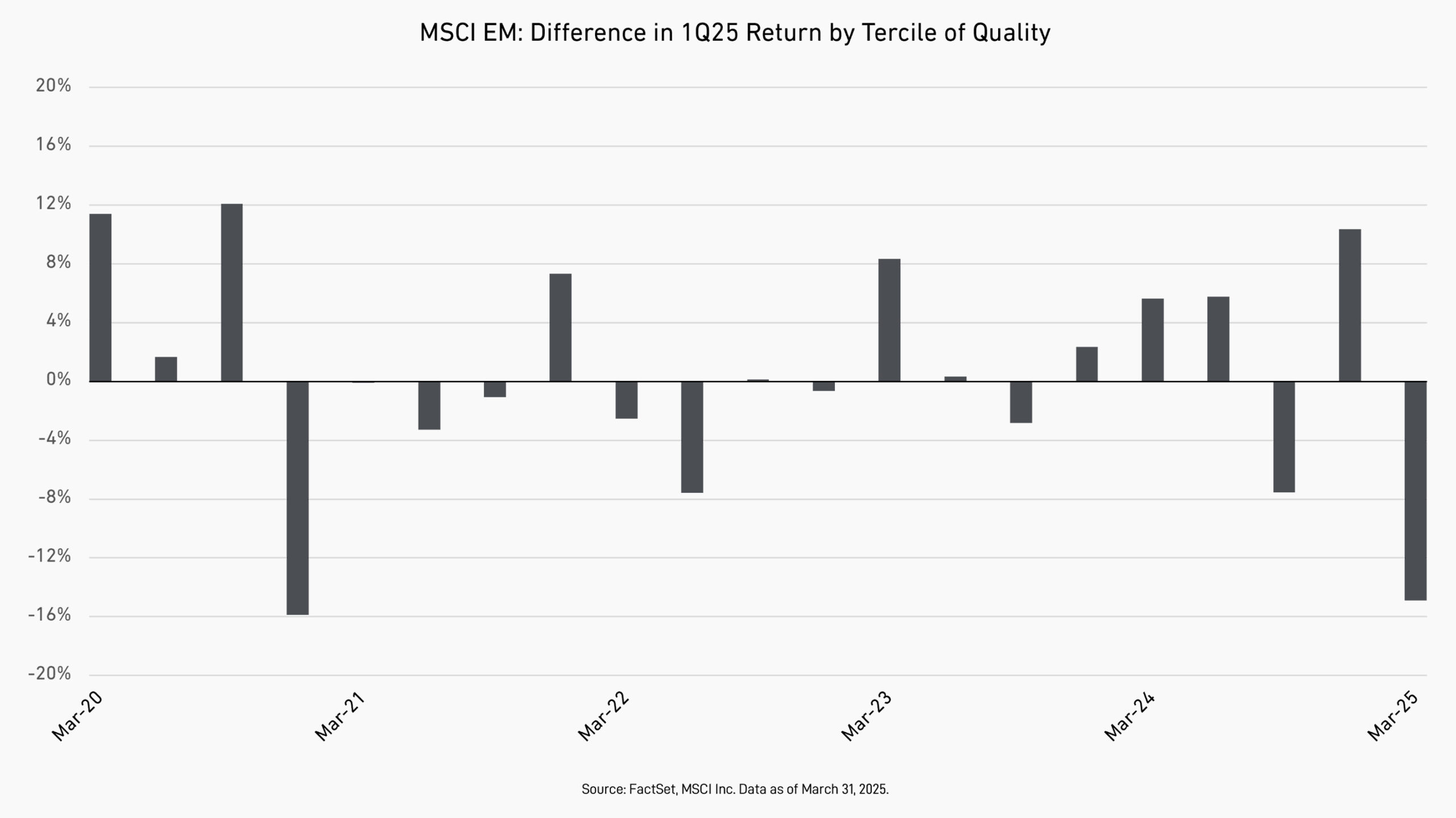

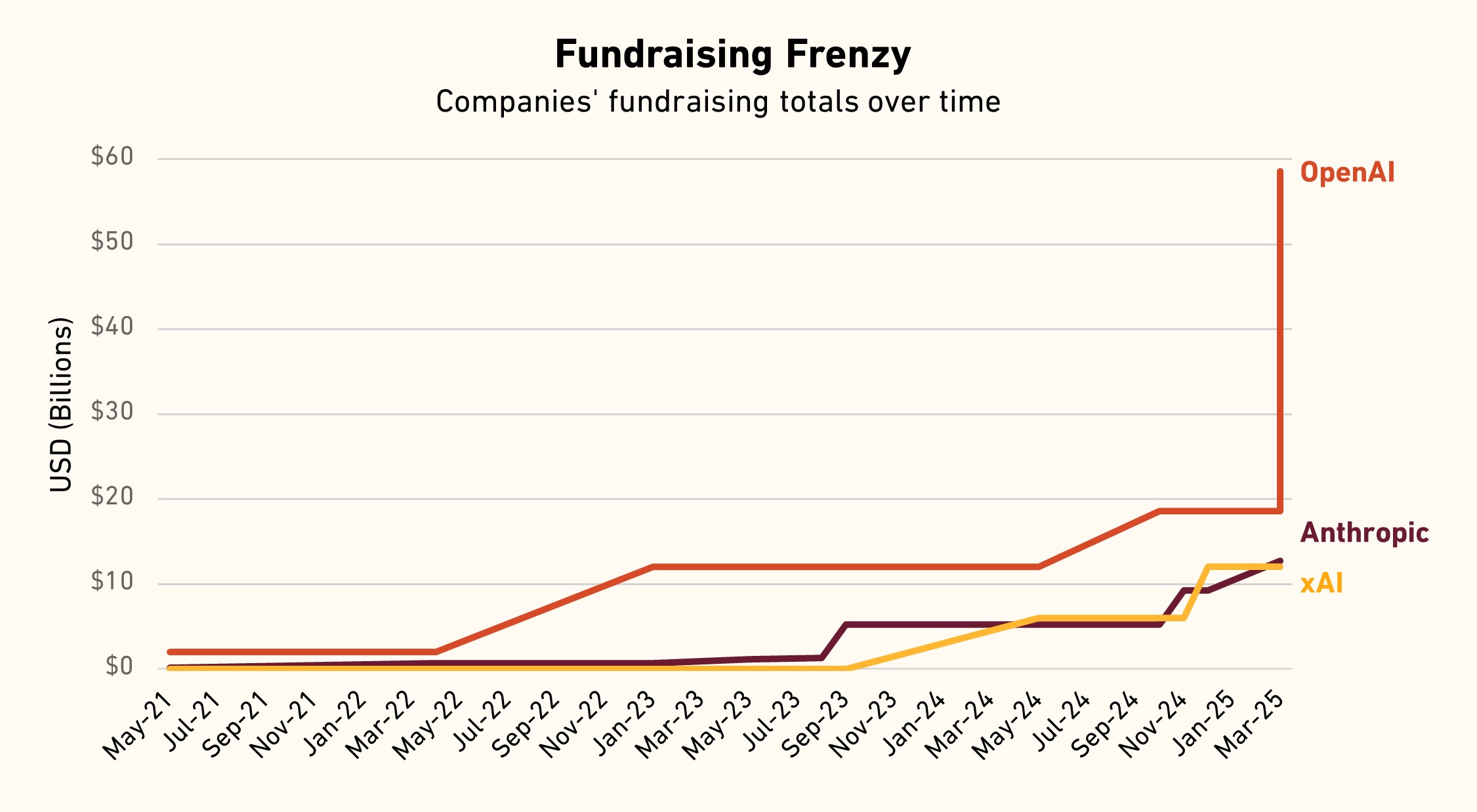

Large language models are the brain of AI software products: they interpret language, generate content, and automate reasoning. OpenAI, Anthropic, and xAI, with the aid of massive investment by venture capital firms, are among the companies racing to build the most capable and efficient models to attract customers and win market share. Together, the three have raised an unprecedented US$80 billion in only a few years. (For context, a total of US$200 billion was raised across the broader venture capital industry in the US last year.) That capital is being used to invest aggressively in workers (in some cases paying over US$200 million for a single engineer) and in techniques that make the companies' models faster, cheaper, and more powerful for users.

Source: Company press releases.

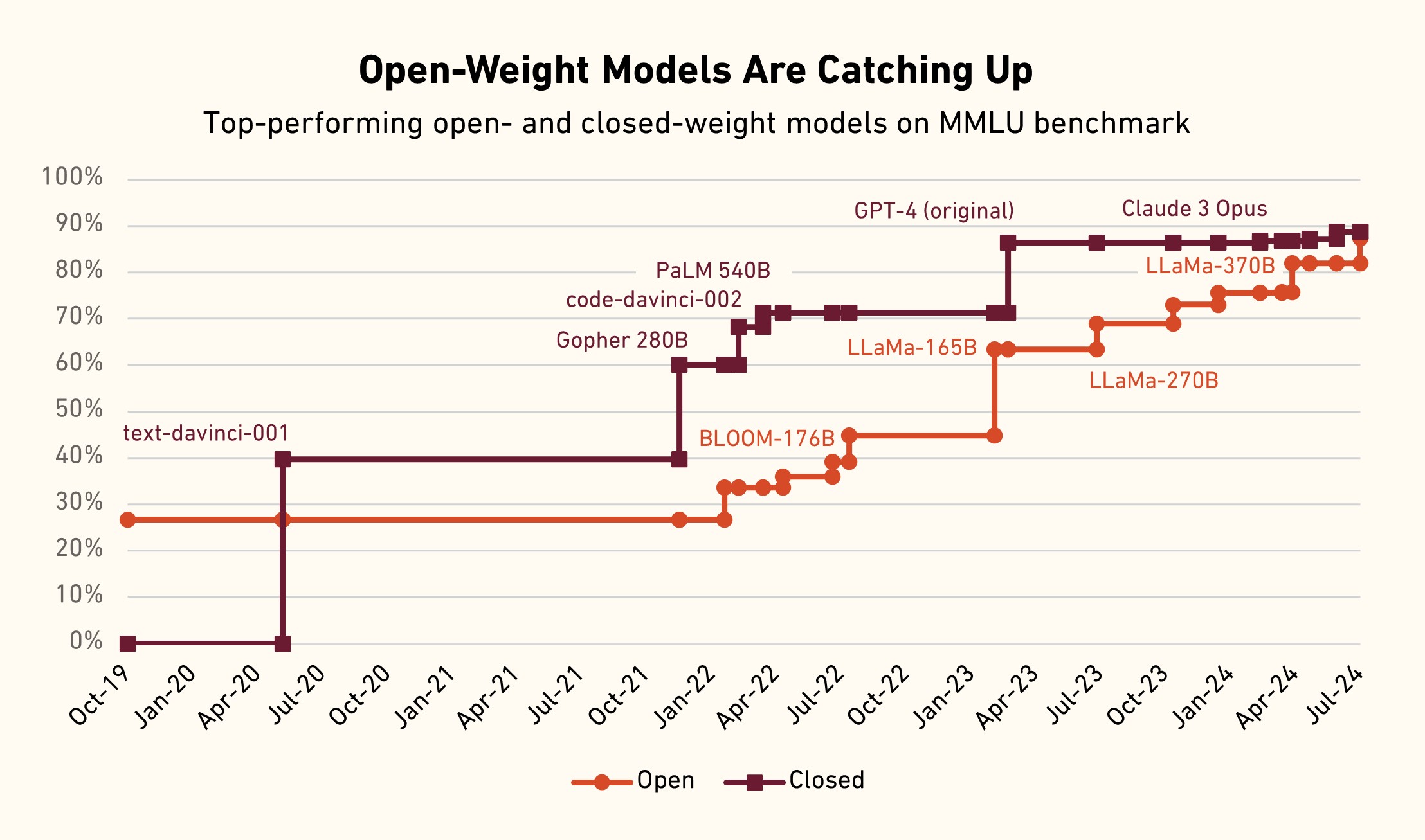

Partly spurred by the innovations from China’s DeepSeek, there has been a Cambrian explosion in open-weight AI models in recent months. Unlike closed-weight models, open-weight models can be customized by users and run on their own infrastructure. Although they are free for commercial use and redistribution, open-weight models increasingly rival closed-weight models in performance on popular benchmarks, such as the MMLU leaderboard. That puts pressure on the ability of leading closed-model vendors to charge premium prices for their proprietary systems. OpenAI, the leading provider of closed-weight models and once firmly committed to keeping its models proprietary, now appears to have shifted course.

Source: Epoch AI.

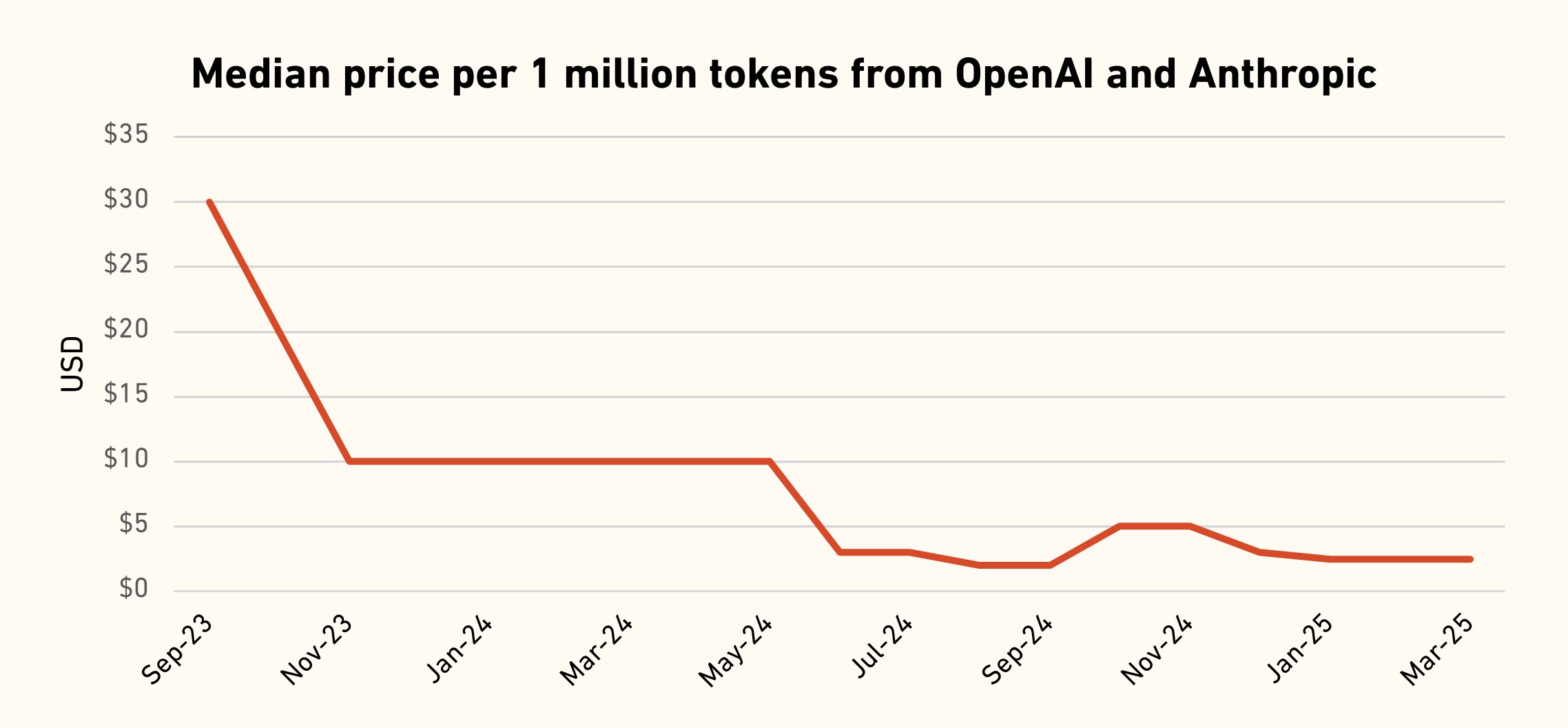

The key hardware that powers these models, graphics processing units (GPUs), is also rapidly getting cheaper on a cost-per-performance basis. Companies such as NVIDIA and AMD are releasing more powerful and specialized chips that reduce the cost of training and running AI models. NVIDIA’s latest Blackwell chip, for example, offers up to a thirtyfold improvement in speed over its H100 predecessor for some AI use cases, according to the company. These forces have combined to lower the cost of AI model tokens (the base unit of AI models’ input and output) by 75% in just one year. Features and products that were previously uneconomical are now viable. For example, in 2023, the leading workflow software vendor ServiceNow launched its popular ServiceNow Pro+ product, which includes an AI-powered IT help-desk agent and commands a 30% price increase relative to its Pro product.

Source: Ramp.

Technology cost deflation is not a passing trend: it has long shaped software development. Most recently, it played out in cloud infrastructure. If AI models are the brains of modern software, cloud infrastructure is the bones. Leading cloud-infrastructure providers such as Amazon Web Services and Microsoft Azure offer the basic building blocks, including storage, networking, and computing power, needed to stand up applications. Thanks to steady advances in chip technology, as long described by Moore’s Law, the cost of cloud storage and computing power has fallen to near zero.

Over time, this decrease in cloud computing costs has been passed on to software companies, whose gross margins average 72%, higher than those of every industry except real estate investment trusts. It has also lowered the cost of creating new software products that improve customer retention and deliver better value. For example, it allowed ServiceNow to enter new markets, including field service management, and Adobe to launch mobile versions of its popular Photoshop software and new products such as Adobe Express. All this high-margin innovation has led to strong returns for software stocks. As of the end of 2024, software accounted for 10% of the total market capitalization of the S&P 500 Index, more than double its 4% share in 2014.

AI looks to be following a path similar to that of cloud computing: while AI model builders compete aggressively and the technology pushes forward, it is their customers, the software providers, that stand to benefit.